We’ve all seen images online that don’t look quite right, but have we ever considered how they make us feel about the world more generally?

Now a visual culture expert at Birmingham City University (BCU) is exploring what happens when images produced by artificial intelligence (AI) go wrong, and is asking the all-important question: do they have the power to alienate us from the world?

AI image-processing models work by finding patterns in data to make predictions. If the data is flawed, they learn incorrect patterns that skew their predictions, producing errors known as hallucinations. This phenomenon also occurs in images, and it increasingly influences how we see and experience the world.

“AI is a large part of our lives and already controls many of the choices we make and how we interact with reality,” said Dr Anthony Downey, Professor of Visual Culture in the Middle East and North Africa at BCU.

“AI image-processing models don’t experience the world as we do; they replicate a once-removed, distorted depiction of our reality, similar to what we’d experience in a house of mirrors at a funfair.

“It’s therefore critical that we find creative ways not only to explore this phenomenon, but also to challenge the biases and often flawed predictions that power these technologies,” Professor Downey explained.

Professor Downey recently announced a series of initiatives examining the relationship between AI and art practices, which include a book, peer-reviewed journal publications, and conferences.

His recent book, Adversarially Evolved Hallucinations (Sternberg Press, 2024), produced with internationally acclaimed artist Trevor Paglen, outlines the extent to which hallucinations indicate systemic biases in AI image-processing platforms.

Overviews of the book can be read on the MIT Press Reader and in Popular Science magazine, the latter having once been an outlet for the writings and ideas of Charles Darwin, Thomas Henry Huxley, Louis Pasteur, Thomas Edison, and many others.

“AI is making huge waves in the creative sector,” Professor Downey added.

“Given the ‘black box’ context of AI – the fact that its internal workings are opaque to users and programmers alike – it’s even more important to explore how art practices can engage with these issues and encourage our students to do the same.

“By teaching students to find new ways to think through AI systems, we’re equipping them with the digital skills and problem-solving aptitude required for a changing jobs market.”

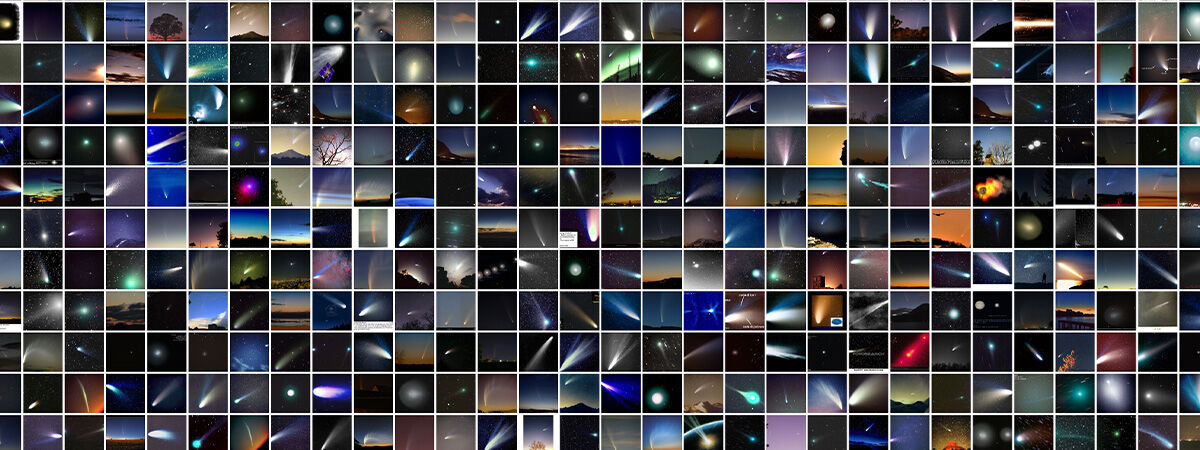

Data Sets: Omens and Portents (Image Courtesy of Trevor Paglen)

In September, Professor Downey announced the first Special Journal Collection on AI and Memory for Memory, Mind & Media (Cambridge University Press). The Collection will publish work by leading researchers in the humanities, the social sciences and Generative AI (GenAI).

His research into how prediction in AI powers and sustains the deployment of autonomous weapons systems (AWS) in contemporary warfare also informed a recent issue of Digital War (Palgrave Macmillan, 2024), and he will publish his overall findings in this field in a book with MIT Press in 2026.

More recently, Professor Downey presented on this subject as part of The Futures of War project at the Imperial War Museum and, in November, at the three-day Investigating the Kill Cloud conference in Berlin.

In December, he will give a series of lectures on critical AI and contemporary art practices at BCU’s College of Art and Design for staff and students.

Find out more about Professor Downey’s upcoming projects and ongoing research.